Getting Started¶

Zhenwen Dai (2018.10.22)

# Copyright 2018 Amazon.com, Inc. or its affiliates. All Rights Reserved.

#

# Licensed under the Apache License, Version 2.0 (the "License").

# You may not use this file except in compliance with the License.

# A copy of the License is located at

#

# http://www.apache.org/licenses/LICENSE-2.0

#

# or in the "license" file accompanying this file. This file is distributed

# on an "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either

# express or implied. See the License for the specific language governing

# permissions and limitations under the License.

# ==============================================================================

Introduction¶

MXFusion is a probabilistic programming language. It provides a convenient interface for designing probabilistic models and applying them to real world problems.

Probabilistic models describe the relationships in data through probabilistic distributions of random variables. Probabilistic modeling is typically done by stating your prior belief about the data in terms of a probabilistic model and performing inference with the observations of some of the random variables.

[1]:

import warnings

warnings.filterwarnings('ignore')

import os

os.environ['MXNET_ENGINE_TYPE'] = 'NaiveEngine'

A Simple Example¶

Let’s start with a toy example about estimating the mean and variance of a set of data. For simplicity, we generate 100 data points with a given mean and variance following a normal distribution.

[2]:

import numpy as np

np.random.seed(0)

mean_groundtruth = 3.

variance_groundtruth = 5.

N = 100

data = np.random.randn(N)*np.sqrt(variance_groundtruth) + mean_groundtruth

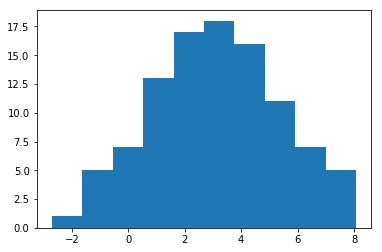

Let’s visualize our data by building a histogram.

[3]:

%matplotlib inline

from pylab import *

_=hist(data, 10)

Now, let’s pretend that we do not know the mean and variance that are used to generate the above data.

We still believe that the data come from a normal distribution, which is our model. It is formulated as

In MXFusion, the above model can be defined as follows:

[4]:

from mxfusion import Variable, Model

from mxfusion.components.variables import PositiveTransformation

from mxfusion.components.distributions import Normal

from mxfusion.common import config

config.DEFAULT_DTYPE = 'float64'

m = Model()

m.mu = Variable()

m.s = Variable(transformation=PositiveTransformation())

m.Y = Normal.define_variable(mean=m.mu, variance=m.s, shape=(N,))

In the above definition, we start with defining a model by instantiated from the class Model. The variable \(\mu\) and \(s\) are created from the class Variable. Both of them are assigned as members of the model instance m. This is how variables are organized in MXFusion. The variable s is created by passing a PositiveTransformation instance to the transforamtion argument. This constrains the value of the variable s to be positive through a “soft-plus” transformation. The variable Y is created from a normal distribution by specifying the mean and variance and its shape.

Note that, in this example, the mean and variance variable are both scalar, with the shape (1,), while the random variable Y has the shape (100,). This indicates the mean and variance variable are broadcasted into the shape of the random variable, just like the broadcasting rule in numpy array operation. In this case, this means the individual entries of the random variable Y follows a scalar normal distribution with the same mean and variance.

To list the content that is defined in the model instance, just print the model instance as follows:

[5]:

print(m)

Y ~ Normal(mean=mu, variance=s)

After defining the probabilistic model, we want to estimate the mean and variance of the normal distribution in our model conditioned on the data that we generated. In MXFusion, this is done by creating an inference algorithm and passing it into the creation of an Inference instance. An inference algorithm represents a specific algorithm for a probabilistic inference. In this example, we performs a maximum likelihood estimate by using the MAP class. The Inference class takes care of the initialization of parameters and the execution of inference.

In the following code, we created a MAP inference algorithm by specifying the model and the set of observed variable. Then, we created a GradBasedInference instance from the instantiated MAP infernece algorithm.

The execution of inference is done by calling the call function. The call function takes all observed data (specified when creating the inference algorithm) as the keyword arguments, where the keys are the names of the member variables of the model and the values are the corresponding MXNet NDArrays. In this example, we only observed the variable Y, then, we pass “Y” as the key and the generated data as the value. We also specify the configuration parameters for the gradient optimizer such as the learning rate, the maximum number of iterations and whether to print the optimization progress. The default optimizer is adam.

[6]:

from mxfusion.inference import GradBasedInference, MAP

import mxnet as mx

infr = GradBasedInference(inference_algorithm=MAP(model=m, observed=[m.Y]))

infr.run(Y=mx.nd.array(data, dtype='float64'), learning_rate=0.1, max_iter=2000, verbose=True)

Iteration 201 loss: 226.00307424753382

Iteration 401 loss: 223.62512496838366

Iteration 601 loss: 223.23108001422028

Iteration 801 loss: 223.16242835266598

Iteration 1001 loss: 223.15215875874755

Iteration 1201 loss: 223.15098355232075

Iteration 1401 loss: 223.15088846215527

Iteration 1601 loss: 223.15088333213185

Iteration 1801 loss: 223.15088315658113

Iteration 2000 loss: 223.15088315295884

After optimization, the estimated parameters are stored in an instance of the class InferenceParameters, which can be access from an Inference instance by infr.params.

We collect the estimated mean and variance and compared with the generating parameters.

[7]:

mean_estimated = infr.params[m.mu].asnumpy()

variance_estimated = infr.params[m.s].asnumpy()

print('The estimated mean and variance: %f, %f.' % (mean_estimated, variance_estimated))

print('The true mean and variance: %f, %f.' % (mean_groundtruth, variance_groundtruth))

The estimated mean and variance: 3.133735, 5.079126.

The true mean and variance: 3.000000, 5.000000.

The estimated parameters are close to the generating parameters, but still off by a small amount. This difference is due to the small size of dataset we used, a problem known as over-fitting.

A Bayesian model¶

From the above example, we have done a maximum likelihood estimate from the observed data. Due to the limited number of data, the estimated parameters are not the same as the true parameters. An interesting question here is that whether we can have an estimate about how big the difference is. One approach to provide such an estimate is via Bayesian inference.

Following the above example, we need to assume prior distributions for the mean and variance of the normal distribution. We assume the mean to be a normal distribution with a relative big variance, indicating that we do not have much knowledge about the parameter.

[8]:

m = Model()

m.mu = Normal.define_variable(mean=mx.nd.array([0], dtype='float64'),

variance=mx.nd.array([100], dtype='float64'), shape=(1,))

Then, we need to specify a prior distribution for the variance. This is a bit more complicated as the variance needs to be positive. In principle, one can use a distribution of positive values such as the Gamma distribution. To enable inference with the reparameterization trick, we, instead, assume a random variable \(\hat{s}\) with a normal distribution and the variance \(s\) is a function of \(\hat{s}\),

To implement the above prior in MXFusion, we first create the variable s_hat with a normal distribution. Then, we defines a function in the MXNet Gluon syntax, which is also called a Gluon block, for the “soft-plus” transformation. The MXNet function is brought into the MXFusion environment by applying a wrapper called MXFusionGluonFunction, in which we specify the number of outputs. We pass the variable s_hat as the input to the function and get the variable s as the return value.

[9]:

from mxfusion.components.functions import MXFusionGluonFunction

m.s_hat = Normal.define_variable(mean=mx.nd.array([5], dtype='float64'),

variance=mx.nd.array([100], dtype='float64'),

shape=(1,), dtype=dtype)

trans_mxnet = mx.gluon.nn.HybridLambda(lambda F, x: F.Activation(x, act_type='softrelu'))

m.trans = MXFusionGluonFunction(trans_mxnet, num_outputs=1, broadcastable=True)

m.s = m.trans(m.s_hat)

We define the variable Y following a normal distribution with the mean mu and the variance s.

[10]:

m.Y = Normal.define_variable(mean=m.mu, variance=m.s, shape=(N,), dtype=dtype)

print(m)

s_hat ~ Normal(mean=Variable(66591), variance=Variable(582f6))

s = GluonFunctionEvaluation(hybridlambda0_input_0=s_hat)

mu ~ Normal(mean=Variable(230f5), variance=Variable(e5c1f))

Y ~ Normal(mean=mu, variance=s)

Inference for the above model is more complex, as the exact inference is intractable. We use variational inference with a Gaussian mean field posterior.

We construct the variational posterior by calling the function create_Gaussian_meanfield, which defines a Gaussian distribution for both the mean and the variance as the variational posterior. The content in the generated posterior can be listed by printing the posterior.

[11]:

from mxfusion.inference import create_Gaussian_meanfield

q = create_Gaussian_meanfield(model=m, observed=[m.Y])

print(q)

s_hat ~ Normal(mean=Variable(987b5), variance=Variable(bf47c))

mu ~ Normal(mean=Variable(9c88b), variance=Variable(3ce74))

Then, we created an instance of StochasticVariationalInference with both the model and the variational posterior. We also need to specify the number of samples used in inference, as it uses the Monte Carlo method for approximating the integral in the variational lower bound. The execution of inference follows the same interface.

[12]:

from mxfusion.inference import StochasticVariationalInference

infr = GradBasedInference(inference_algorithm=StochasticVariationalInference(

model=m, posterior=q, num_samples=10, observed=[m.Y]))

infr.run(Y=mx.nd.array(data, dtype='float64'), learning_rate=0.1, verbose=True)

Iteration 201 loss: 234.52798823187217

Iteration 401 loss: 231.30663180752714

Iteration 601 loss: 230.14185436095775

Iteration 801 loss: 230.02993763459781

Iteration 1001 loss: 229.6937452192292

Iteration 1201 loss: 229.72574317072662

Iteration 1401 loss: 229.65264175269712

Iteration 1601 loss: 229.67285188868293

Iteration 1801 loss: 229.52906307607037

Iteration 2000 loss: 229.64091981034755

Let’s check the resulting posterior distribution.

[13]:

mu_mean = infr.params[q.mu.factor.mean].asscalar()

mu_std = np.sqrt(infr.params[q.mu.factor.variance].asscalar())

s_hat_mean = infr.params[q.s_hat.factor.mean].asscalar()

s_hat_std = np.sqrt(infr.params[q.s_hat.factor.variance].asscalar())

s_15 = np.log1p(np.exp(s_hat_mean - s_hat_std))

s_50 = np.log1p(np.exp(s_hat_mean))

s_85 = np.log1p(np.exp(s_hat_mean + s_hat_std))

print('The mean and standard deviation of the mean parameter is %f(%f). ' % (mu_mean, mu_std))

print('The 15th, 50th and 85th percentile of the variance parameter is %f, %f and %f.'%(s_15, s_50, s_85))

The mean and standard deviation of the mean parameter is 3.120117(0.221690).

The 15th, 50th and 85th percentile of the variance parameter is 4.604521, 5.309114 and 6.016289.

The true parameter sits within one standard deviation of the estimated posterior distribution for both the mean and variance parameters. The above error gives a good indication about how much we could trust the parameters that we estimate.